How DeepNude AI Generators Work (And Why They’re Banned on Most Platforms)

In the fast-evolving world of artificial intelligence, few technologies have sparked as much controversy as the deepnude AI generator. Presented as a machine-learning tool for photo editing, it quickly became notorious for its unethical applications. While many AI tools are designed to enhance creativity or productivity, the deepnude AI generator crossed a serious moral and legal boundary: it uses AI to create fake, explicit images of real people without their consent.

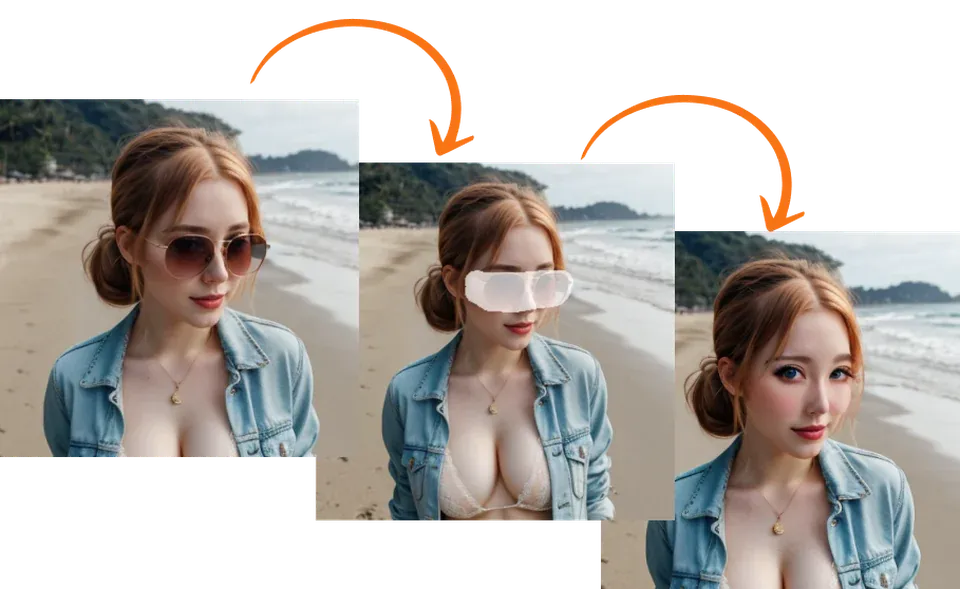

So, how does a deepnude AI generator actually work? At its core, it relies on deep learning, a branch of machine learning that uses neural networks to identify and generate patterns in images. Much as deepfake technology can create realistic videos of people saying or doing things they never did, a deepnude AI generator is trained on a large collection of images so that its model learns to “predict” what a person’s body might look like beneath clothing. It then blends these synthetic elements into the original photo to produce what appears to be a nude image. The process is disturbingly efficient, often taking just seconds to produce a manipulated result that looks authentic to the untrained eye.

The technology behind the deepnude AI generator combines generative adversarial networks (GANs) with image inpainting. A GAN pits two neural networks against each other: a generator that produces fake images and a discriminator that tries to tell whether a given image is real or generated. Over many rounds of training, the generator becomes better at creating realistic visuals, resulting in highly convincing yet completely fabricated content. Image inpainting fills in missing or obscured areas of a photo, allowing the AI to reconstruct parts of an image that were never visible in the original. Together, these techniques let a deepnude AI generator fabricate images convincing enough to fool many viewers.
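For readers who want the formal version, the adversarial "competition" between the two networks is usually written as a minimax objective. The notation below is the conventional textbook formulation of a GAN, not anything taken from a specific deepnude tool: $D(x)$ is the discriminator's estimated probability that image $x$ is real, $G(z)$ is the generator's output for a random noise vector $z$, $p_{\text{data}}$ is the distribution of real training images, and $p_z$ is the noise distribution.

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator is rewarded for scoring real images near 1 and generated ones near 0, while the generator is rewarded for pushing $D(G(z))$ toward 1. Training alternates between the two, which is why the fakes grow steadily harder to distinguish from real photos.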

While the underlying technology might seem fascinating from a technical standpoint, its implications are deeply troubling. The deepnude AI generator drew immediate backlash after its release because it was weaponized to target women, celebrities, and private individuals alike. Victims often found their altered images circulated online, leading to emotional distress, reputational damage, and even legal challenges. In response to the outcry and the ethical concerns it raised, platforms like Reddit, Twitter (now X), and Discord quickly banned the distribution or discussion of deepnude AI generator tools. Lawmakers in several countries have also begun considering specific legislation to criminalize AI-generated non-consensual imagery.

The ban on the deepnude AI generator underscores a broader issue in the AI community: how to balance innovation with responsibility. Developers are increasingly urged to follow ethical guidelines that ensure AI is used to empower, not exploit. Many modern AI projects emphasize transparency, consent, and the protection of user data, recognizing that trust is the foundation of sustainable technology.

As AI continues to evolve, it’s crucial for users to understand not only what tools like the deepnude AI generator can do but also why they shouldn’t be used. These programs serve as a reminder that technology, no matter how advanced, must always be guided by human values. In the end, the question isn’t just about how powerful AI can become—it’s about how responsibly we choose to use it.